Llama 3.1: why a 9x difference in pricing?

- Authors

- AbnAsia.org

- @steven_n_t

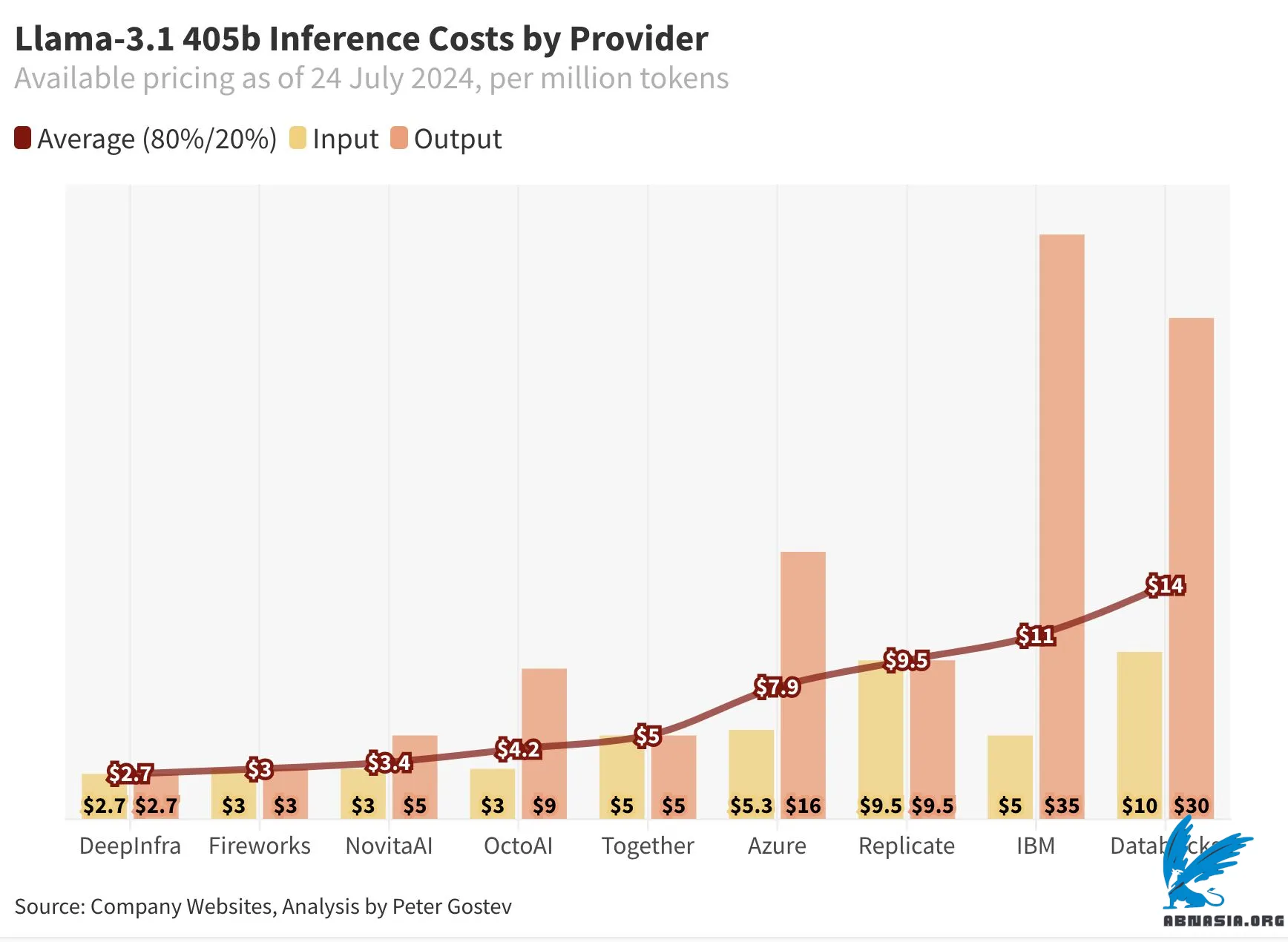

"Llama-3.1 405b has been out for about a day and we have already seen a wild difference in prices (as much as 9x between providers so far), ranging up to $14 per million tokens.

Note: this is an updated chart that corrects the IBM pricing; the previous version showed 5 input and $35 output.

Azure is the most interesting, since it is the biggest provider listed. I could not find any pricing for AWS or Google Vertex, so if Azure is the right benchmark to look at, Llama-3.1 405b is priced at around what GPT-4o costs.

For context, here is some proprietary model pricing (per million tokens):

GPT-4o: $15 output

GPT-4o-Mini: $0.6 output

Claude Sonnet 3.5: $15 output

To calculate the average price, I have assumed a weighting of 80% input tokens and 20% output tokens.

Note that not all providers disclose whether they limit the models in any way. For example, a provider could cap the context length, so that instead of the native 128k you get 32k. It is possible that some models are quantised, but I haven't seen that explicitly called out yet. This comparison also doesn't take into account rate limits or speed, which are other important factors."
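The 80% input / 20% output weighting mentioned in the quote can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the original author's spreadsheet: the function names `blended_price` and `price_spread`, the provider names, and every price in the example are hypothetical placeholders, not figures from the chart.

```python
def blended_price(input_price, output_price, input_share=0.8):
    """Weighted average price per million tokens.

    input_share is the assumed fraction of tokens that are input tokens
    (0.8 matches the 80/20 assumption in the quote); the rest are output.
    """
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    return input_share * input_price + (1.0 - input_share) * output_price


def price_spread(blended_prices):
    """Ratio of the most expensive blended price to the cheapest one."""
    prices = list(blended_prices.values())
    return max(prices) / min(prices)


# Hypothetical (input, output) prices in dollars per million tokens.
# These are placeholders for illustration, not real provider quotes.
providers = {
    "provider_a": (3.0, 3.0),
    "provider_b": (5.0, 15.0),
}

blended = {
    name: blended_price(inp, out) for name, (inp, out) in providers.items()
}

for name, price in sorted(blended.items(), key=lambda kv: kv[1]):
    print(f"{name}: ${price:.2f} per million tokens (blended)")

print(f"spread: {price_spread(blended):.1f}x")
```

Changing `input_share` shows how sensitive the ranking is to the workload: a provider with cheap input but expensive output looks better on retrieval-heavy traffic than on generation-heavy traffic.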

Author

DIGITIZING ASIA, ABN ASIA was founded by people with deep roots in academia, with work experience in the US, Holland, Hungary, Japan, South Korea, Singapore, and Vietnam. ABN Asia is where academia and technology meet opportunity. With our cutting-edge solutions and competent software development services, we're helping businesses level up and take on the global scene. Our commitment: Faster. Better. More reliable. In most cases: Cheaper as well.

Feel free to reach out to us whenever you require IT services, digital consulting, off-the-shelf software solutions, or if you'd like to send us requests for proposals (RFPs). You can contact us at [email protected]. We're ready to assist you with all your technology needs.

© ABN ASIA