A new method to delete 40% of LLM layers with no drop in accuracy

By AbnAsia.org (@steven_n_t)

Researchers have developed a new method that deletes 40% of an LLM's layers with no drop in accuracy.

Fewer layers means less memory and less compute per token, so the pruned models are cheaper and faster to run.

The method combines layer pruning, quantization, and parameter-efficient fine-tuning (PEFT), and it was tested across several open-source model families.
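The post does not say how the layers to delete are chosen. The following is a minimal sketch of one plausible selection heuristic, under our own assumption (not stated in the post): remove the block of `n` consecutive layers whose input and output hidden states are most similar, measured by angular distance averaged over tokens. The function names and the synthetic data are hypothetical, and the quantization and PEFT fine-tuning steps that repair the pruned model are not shown.

```python
import numpy as np


def angular_distance(x: np.ndarray, y: np.ndarray) -> float:
    """Mean angular distance, scaled to [0, 1], between matching rows of x and y."""
    # Normalize each token's hidden state to unit length.
    x_n = x / np.linalg.norm(x, axis=-1, keepdims=True)
    y_n = y / np.linalg.norm(y, axis=-1, keepdims=True)
    # Clip guards against floating-point values just outside [-1, 1].
    cos = np.clip(np.sum(x_n * y_n, axis=-1), -1.0, 1.0)
    return float(np.mean(np.arccos(cos) / np.pi))


def choose_block_to_prune(hidden_states: list[np.ndarray], n: int) -> int:
    """Return the start index of the n consecutive layers to remove.

    hidden_states[i] is the (tokens, dim) input to layer i, and the last entry
    is the final layer's output, so there are num_layers + 1 entries.
    """
    num_layers = len(hidden_states) - 1
    if not 0 < n < num_layers:
        raise ValueError("n must be between 1 and num_layers - 1")
    # Distance between the input of layer l and the input of layer l + n:
    # a small value means layers l .. l + n - 1 change the representation little.
    distances = [
        angular_distance(hidden_states[l], hidden_states[l + n])
        for l in range(num_layers - n + 1)
    ]
    return int(np.argmin(distances))


def prune_layers(layers: list, start: int, n: int) -> list:
    """Drop layers start .. start + n - 1, keeping the rest in order."""
    return layers[:start] + layers[start + n:]


if __name__ == "__main__":
    # Hypothetical synthetic data: 12 layers, 16 tokens, 64-dim hidden states.
    rng = np.random.default_rng(0)
    states = [rng.normal(size=(16, 64))]
    for i in range(12):
        if 5 <= i < 8:
            # Layers 5-7 barely change their input (near-identity layers).
            states.append(states[-1] + 1e-3 * rng.normal(size=(16, 64)))
        else:
            # Other layers produce an unrelated representation.
            states.append(rng.normal(size=(16, 64)))
    start = choose_block_to_prune(states, n=3)
    print(start)  # prints 5
    print(prune_layers(list(range(12)), start, 3))  # prints [0, 1, 2, 3, 4, 8, 9, 10, 11]
```

In the synthetic data, layers 5 to 7 barely change the representation, so the heuristic picks them as the block to remove. With a real model, the hidden states would come from running a sample of text through it.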

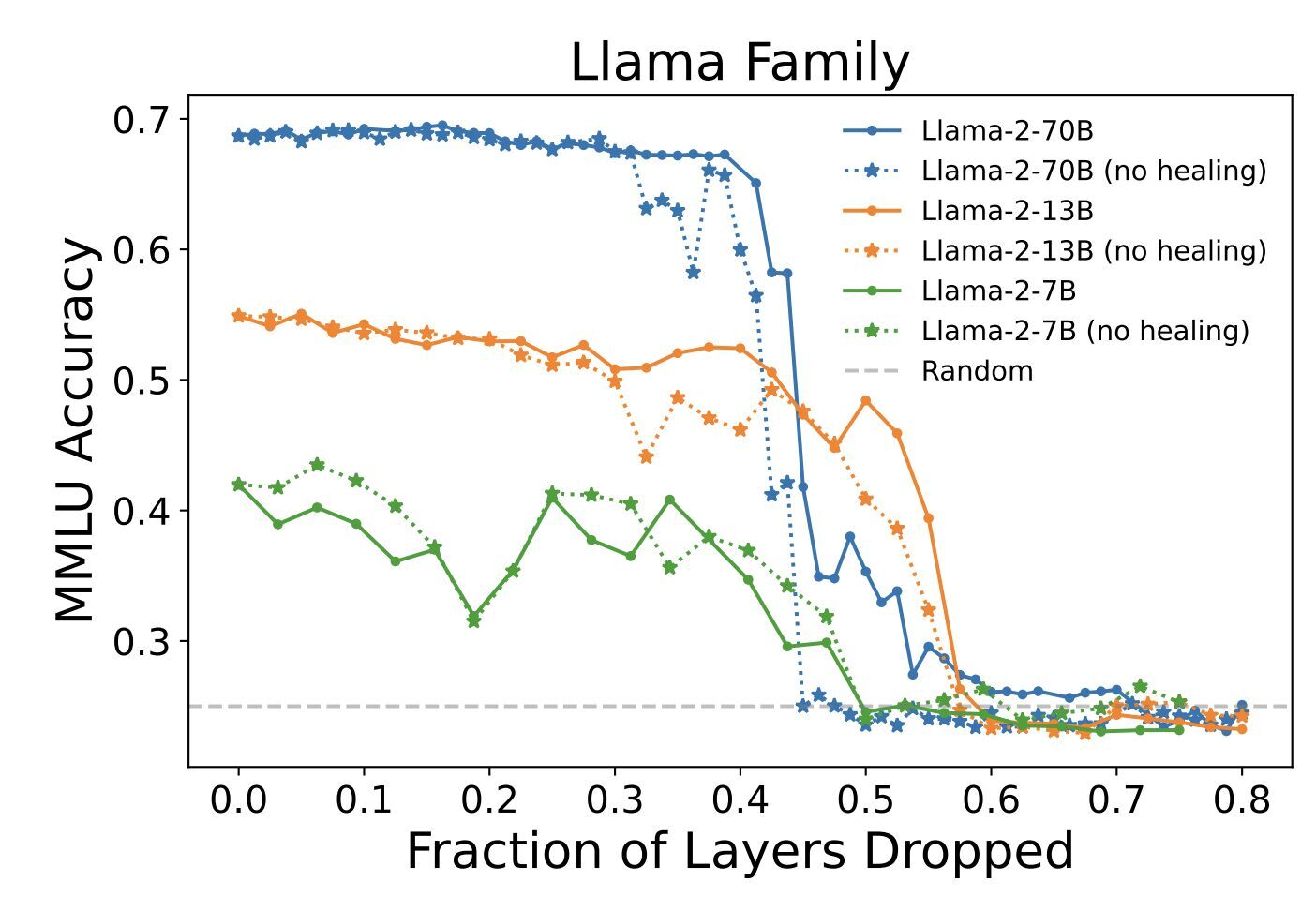

Each model family had a maximum fraction of layers that could be deleted before accuracy dropped:

- Mistral: 30%
- Llama 70B: 40%
- Llama 13B: 50%
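To put these percentages in terms of whole layers, the arithmetic below assumes specific model versions that the post does not name: Mistral 7B (32 layers), Llama 2 13B (40 layers), and Llama 2 70B (80 layers). Because the embedding table and the output head are never removed, the share of parameters saved is somewhat smaller than the share of layers removed.

```python
# Assumed model versions and layer counts: the post names only "Mistral",
# "Llama 70B" and "Llama 13B", so these specific versions are assumptions.
NUM_LAYERS = {"Mistral 7B": 32, "Llama 2 70B": 80, "Llama 2 13B": 40}
# Maximum prunable share of layers reported in the post, in percent.
MAX_PRUNE_PERCENT = {"Mistral 7B": 30, "Llama 2 70B": 40, "Llama 2 13B": 50}


def layers_removable(num_layers: int, percent: int) -> int:
    """Whole layers that fit within `percent` of num_layers (rounded down)."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return num_layers * percent // 100


for name, total in NUM_LAYERS.items():
    dropped = layers_removable(total, MAX_PRUNE_PERCENT[name])
    print(f"{name}: drop {dropped} of {total} layers, keep {total - dropped}")
```

Under these assumptions, the script reports 9 of 32 layers for Mistral 7B, 32 of 80 for Llama 2 70B, and 20 of 40 for Llama 2 13B.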

Author

AI Backbone Network (ABN Asia) was founded by people with deep roots in academia and work experience in the US, Holland, Hungary, Japan, South Korea, Singapore, and Vietnam. ABN Asia is where academia and technology meet opportunity. With our cutting-edge solutions and competent software development services, we're helping businesses level up and take on the global scene. Our commitment: Faster. Better. More reliable. In most cases: Cheaper as well.

Feel free to reach out whenever you need IT services, digital consulting, or off-the-shelf software solutions, or if you'd like to send us a request for proposal (RFP). You can contact us at [email protected]. We're ready to assist you with all your technology needs.

© ABN ASIA