LLMOps: A Friendly Introduction

- Authors

- Name

- AbnAsia.org

- @steven_n_t

So, you heard the buzz around LLMOps from your friends or colleagues and are wondering what the fuss is all about.

Let’s dig in.

First, What is it?

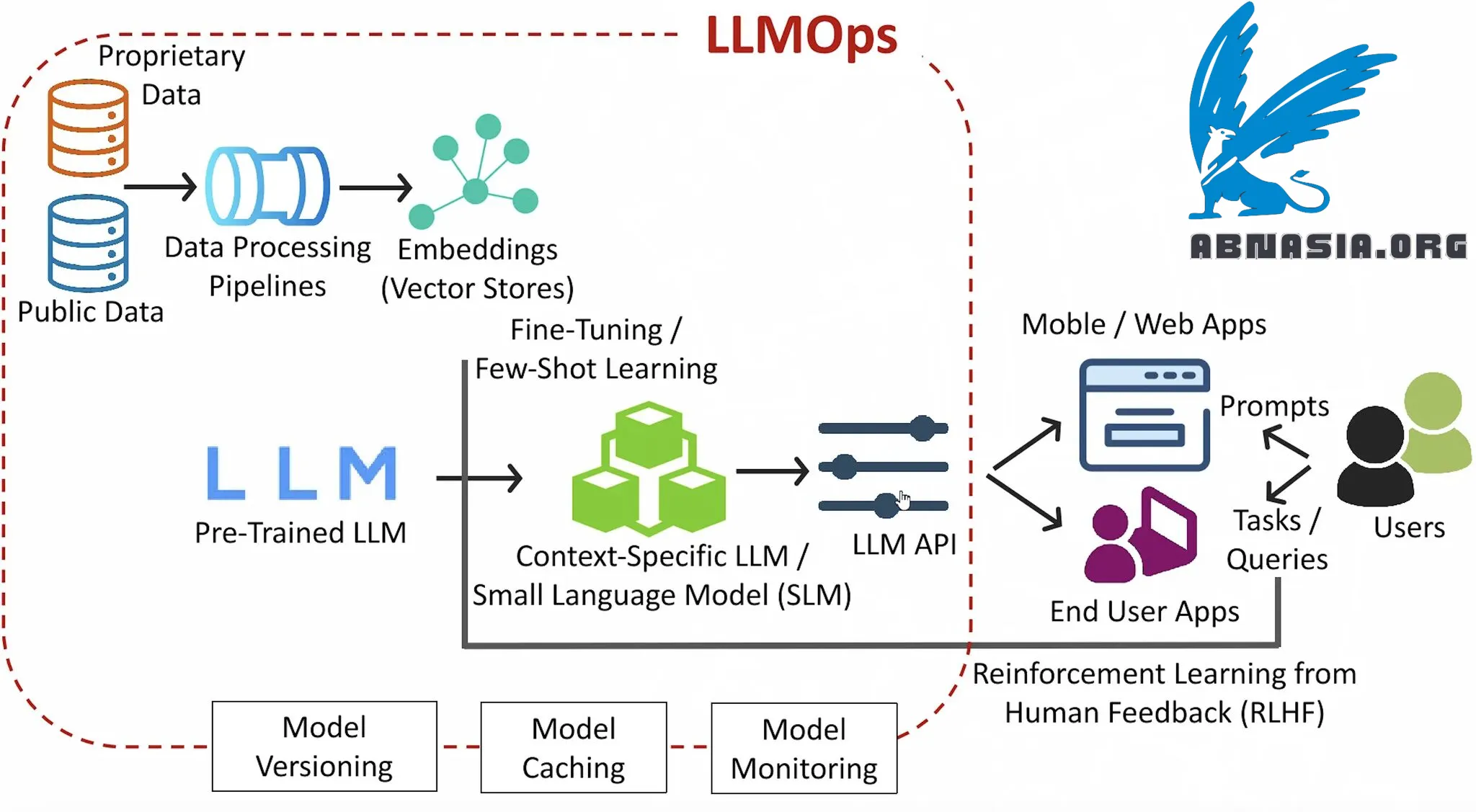

Think of it as the next evolution of MLOps, which was itself an evolution of DevOps tailored for ML.

LLMOps tailors MLOps further to handle the specific challenges of working with large language models.

Think of it like this:

DevOps → MLOps → LLMOps

So it keeps the CI/CD pipelines and model monitoring you know from MLOps, and adds practices for LLM-specific tasks like prompt engineering and human feedback loops.

Why is it Needed?

Ah. LLMs are complex. Much more complex than classical ML models. They need special tooling, and hence LLMOps is essential:

- LLMs are massive: Running them efficiently requires careful planning of computational resources like GPUs or TPUs.

- LLM applications are more than the model: They often rely on supporting components like vector databases for retrieval.

- Training and serving LLMs is $$$$: More eyes and care are needed to make them cost-efficient.
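To make the cost point concrete, here is a minimal back-of-the-envelope sketch for a pay-per-token serving setup. The function name `estimate_monthly_cost`, the traffic figures, and the per-1,000-token prices are all made-up assumptions for illustration, not real vendor pricing.

```python
def estimate_monthly_cost(requests_per_day, input_tokens, output_tokens,
                          price_in_per_1k, price_out_per_1k, days=30):
    """Estimate monthly spend, in the same currency as the prices."""
    # Cost of one request: input and output tokens are usually priced separately.
    per_request = (input_tokens / 1000) * price_in_per_1k \
        + (output_tokens / 1000) * price_out_per_1k
    return per_request * requests_per_day * days


# Hypothetical workload and hypothetical prices, for illustration only.
cost = estimate_monthly_cost(
    requests_per_day=10_000,
    input_tokens=500,
    output_tokens=200,
    price_in_per_1k=0.001,
    price_out_per_1k=0.002,
)
print(f"Estimated monthly cost: {cost:.2f}")
```

Even a rough estimate like this shows how quickly prompt length and traffic multiply into real money.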

So, What All Falls Under It?

Prompt Engineering

- Since LLMs are heavily influenced by how you ask questions (prompts), managing prompts involves tracking and optimizing them for the best results.

- Tools like LangChain or MLflow can help streamline this process.
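Before reaching for a tool, it can help to see what "tracking prompts" means in its simplest form. The sketch below is a hypothetical in-memory `PromptRegistry` (not the LangChain or MLflow API) that gives each prompt template a version id derived from its content, so you can tell which wording produced which result.

```python
import hashlib


class PromptRegistry:
    """Hypothetical in-memory store: prompt name -> ordered list of versions."""

    def __init__(self):
        self._versions = {}

    def register(self, name, template):
        """Store a template and return a short content hash as its version id."""
        version_id = hashlib.sha256(template.encode("utf-8")).hexdigest()[:8]
        history = self._versions.setdefault(name, [])
        # Registering identical text twice should not create a new version.
        if all(existing_id != version_id for existing_id, _ in history):
            history.append((version_id, template))
        return version_id

    def history(self, name):
        """Return the version ids for a prompt, oldest first."""
        return [version_id for version_id, _ in self._versions[name]]

    def render(self, name, **variables):
        """Fill the most recently registered template with variables."""
        _, template = self._versions[name][-1]
        return template.format(**variables)


registry = PromptRegistry()
v1 = registry.register("summarize", "Summarize: {text}")
v2 = registry.register("summarize", "Summarize in one sentence: {text}")
print(registry.history("summarize"))
print(registry.render("summarize", text="LLMOps extends MLOps."))
```

In a real setup you would log the version id next to every model response, so an evaluation result can always be traced back to the exact prompt wording.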

Deployment & Scalability

- Deploying LLMs isn’t the same as deploying smaller models. You need to deal with massive loads on GPUs/TPUs.
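To get a feel for why GPUs/TPUs become the bottleneck, here is a rough sizing sketch. It only counts the memory needed to hold the model weights, assuming a fixed number of bytes per parameter. Real deployments need extra room for activations and caches, so treat the result as a lower bound. The helper name `weight_memory_gib` and the 7-billion-parameter example are illustrative assumptions.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params, dtype="fp16"):
    """Memory for the weights alone, in GiB (excludes activations and caches)."""
    return num_params * BYTES_PER_PARAM[dtype] / (1024 ** 3)


# Hypothetical 7-billion-parameter model at different precisions.
for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_memory_gib(7_000_000_000, dtype):.1f} GiB")
```

Halving the precision halves the weight memory, which is one reason lower-precision formats are popular for serving.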

Cost-Performance Trade-offs

- LLMOps involves balancing latency, performance, and cost. Techniques like fine-tuning smaller models or using parameter-efficient tuning (e.g., LoRA) can help.
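A quick bit of arithmetic shows why LoRA is called parameter-efficient. Instead of updating a full weight matrix of size d_out x d_in, LoRA trains two small matrices of rank r, which adds r x (d_in + d_out) trainable parameters. The layer size and rank below are example values chosen for illustration.

```python
def full_finetune_params(d_in, d_out):
    """Trainable parameters when updating the whole weight matrix."""
    return d_in * d_out


def lora_params(d_in, d_out, rank):
    """Trainable parameters for LoRA: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


# Example values: one 4096 x 4096 projection layer, LoRA rank 8.
full = full_finetune_params(4096, 4096)
lora = lora_params(4096, 4096, rank=8)
ratio = lora / full
print(f"full: {full:,}  lora: {lora:,}  ratio: {ratio:.4%}")
```

For this single layer, LoRA trains well under 1% of the parameters that full fine-tuning would touch.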

Human Feedback Integration

- Feedback loops are essential for improving model responses. Reinforcement Learning from Human Feedback (RLHF) is part of the LLMOps workflow.
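RLHF itself is a training procedure, but the operational side starts with collecting feedback in a usable shape. As a minimal sketch, assume human raters score responses on a numeric scale. The hypothetical helper `build_preference_pairs` below turns those scores into "chosen vs. rejected" pairs, a data shape commonly used for preference-based training. The field names are assumptions.

```python
from collections import defaultdict
from itertools import combinations


def build_preference_pairs(ratings):
    """Convert (prompt, response, score) ratings into chosen/rejected pairs."""
    by_prompt = defaultdict(list)
    for prompt, response, score in ratings:
        by_prompt[prompt].append((response, score))

    pairs = []
    for prompt, items in by_prompt.items():
        for (resp_a, score_a), (resp_b, score_b) in combinations(items, 2):
            if score_a == score_b:
                continue  # a tie carries no preference signal
            if score_a > score_b:
                chosen, rejected = resp_a, resp_b
            else:
                chosen, rejected = resp_b, resp_a
            pairs.append({"prompt": prompt, "chosen": chosen, "rejected": rejected})
    return pairs


# Made-up ratings for illustration.
ratings = [
    ("What is LLMOps?", "answer A", 5),
    ("What is LLMOps?", "answer B", 2),
    ("What is LLMOps?", "answer C", 5),
]
pairs = build_preference_pairs(ratings)
print(pairs)
```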

Monitoring and Testing

- Testing LLMs involves more than traditional accuracy metrics. Monitoring must also capture bias, hallucination rates, and operational signals such as latency and cost.
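Here is a minimal sketch of what a monitoring summary could look like. It assumes each logged request already carries a `latency_ms` value and a `hallucination` flag, for example set by a human reviewer or an automated checker. Both field names and the sample data are hypothetical.

```python
import math


def nearest_rank_percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(records):
    """Aggregate request logs into a few headline monitoring numbers."""
    latencies = [r["latency_ms"] for r in records]
    flagged = sum(1 for r in records if r["hallucination"])
    return {
        "requests": len(records),
        "hallucination_rate": flagged / len(records),
        "p95_latency_ms": nearest_rank_percentile(latencies, 95),
    }


# Ten made-up log records: latencies 100..1000 ms, two flagged responses.
records = [
    {"latency_ms": 100 * (i + 1), "hallucination": i in (2, 7)}
    for i in range(10)
]
report = summarize(records)
print(report)
```

Tracking numbers like these over time is what turns a one-off test into monitoring.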

Model Packaging

- Models need to be packaged in a standard, reproducible format (for example, a container image with pinned dependencies) so they deploy consistently across different systems.

How Can I Get Into LLMOps?

Technical Foundations

- Machine Learning Fundamentals: Understand model training, evaluation, and deployment.

- Programming: Python is a must, along with familiarity with libraries like TensorFlow, PyTorch, or Hugging Face Transformers.

LLM-Specific Knowledge

- Prompt Engineering: Learn how to structure inputs for optimal LLM performance.

- Fine-Tuning: Master lightweight fine-tuning methods like LoRA or adapters.

MLOps Expertise

- Know tools like Docker, Kubernetes, and MLflow.

Vector Stores

- Familiarity with vector databases like Pinecone, Weaviate, etc., is becoming essential for LLM applications.
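If vector databases feel abstract, the core idea fits in a few lines: store one vector per document, then return the documents whose vectors are most similar to the query vector. The sketch below uses plain Python and tiny hand-written vectors as stand-ins for real embeddings. It is not the Pinecone or Weaviate API, and real systems use approximate search to scale.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the ids of the k documents most similar to the query vector."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Toy "embeddings" written by hand; a real system would get these from a model.
index = {
    "doc_gpu": [1.0, 0.0, 0.0],
    "doc_prompt": [0.0, 1.0, 0.0],
    "doc_gpu_cost": [0.9, 0.1, 0.0],
}
results = top_k([1.0, 0.0, 0.0], index, k=2)
print(results)
```

The retrieved documents are then typically added to the prompt, which is how a vector store ends up in an LLM application.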

Communication & Collaboration

- LLMOps is cross-disciplinary. You’ll work with data scientists, product managers, and engineers, so strong communication skills are a bonus.

That’s it!

Author

AiUTOMATING PEOPLE, ABN ASIA was founded by people with deep roots in academia, with work experience in the US, Holland, Hungary, Japan, South Korea, Singapore, and Vietnam. ABN Asia is where academia and technology meet opportunity. With our cutting-edge solutions and competent software development services, we're helping businesses level up and take on the global scene. Our commitment: Faster. Better. More reliable. In most cases: Cheaper as well.

© ABN ASIA