The speed of change in AI. Amazingly Fascinating.

- Authors: AbnAsia.org (@steven_n_t)

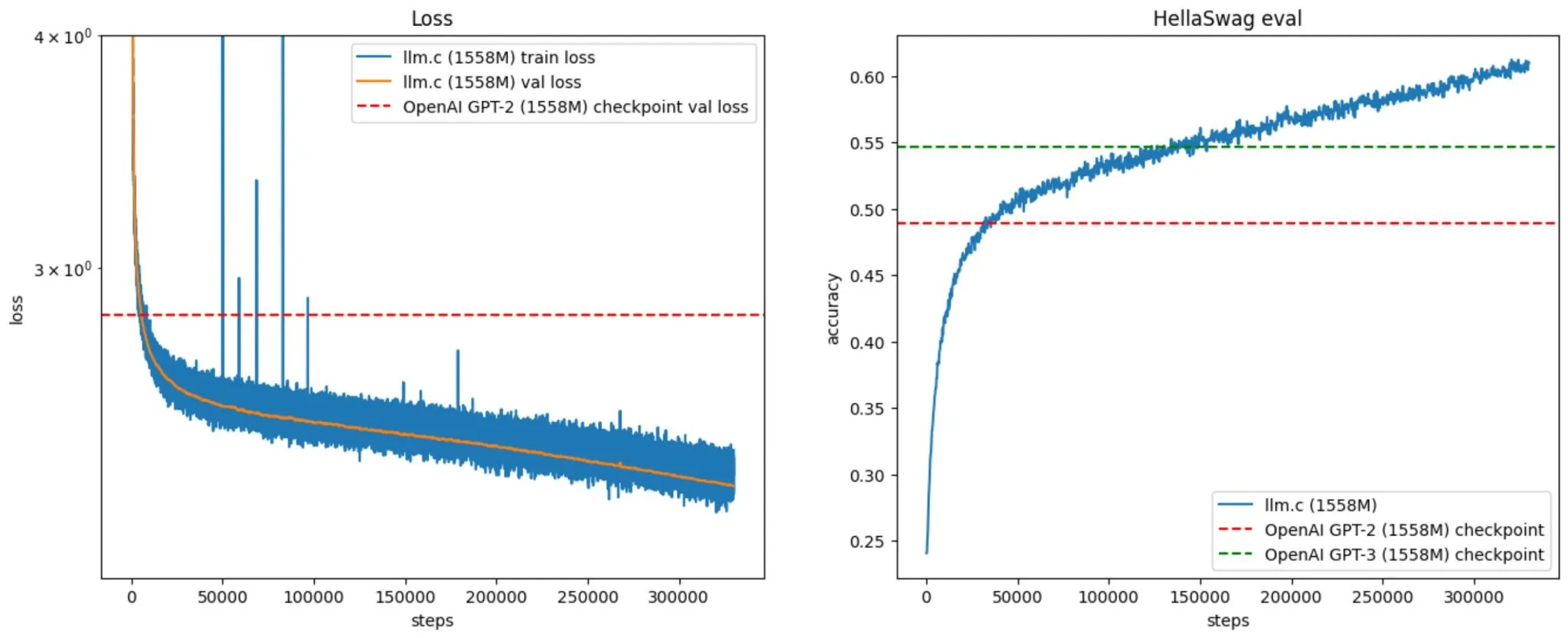

"""In 2019, OpenAI announced GPT-2. Today (~5 years later) you can train your own for ~$672, running on one 8XH100 GPU node for 24 hours.""

The costs have come down dramatically over the last 5 years due to improvements in compute hardware (H100 GPUs), software (CUDA, cuBLAS, cuDNN, FlashAttention) and data quality (e.g. the FineWeb-Edu dataset).

Because llm.c is a direct implementation of GPT training in C/CUDA, the requirements are minimal - there is no need for conda environments, Python interpreters, pip installs, etc.

You spin up a cloud GPU node (e.g. on Lambda), optionally install NVIDIA cuDNN, NCCL/MPI, download the .bin data shards, compile and run, and you're stepping in minutes.

You then wait 24 hours and enjoy samples about English-speaking Unicorns in the Andes."
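To put the quoted cost in perspective, here is a minimal sketch of the arithmetic behind the ~$672 figure. It uses only the three numbers from the quote (total cost, 8 GPUs, 24 hours) and assumes the node is billed at a flat hourly rate for the whole run. The function name `implied_rates` is made up for illustration, and the resulting rates are simply what the quote implies, not a price list from any provider.

```python
# Figures taken from the quote above.
TOTAL_COST_USD = 672.0  # quoted approximate cost of the full run
GPUS_PER_NODE = 8       # one 8XH100 node
RUN_HOURS = 24          # quoted wall-clock duration


def implied_rates(total_cost_usd, gpus, hours):
    """Return (node_rate, gpu_rate) in USD per hour implied by a total cost.

    Assumes flat hourly billing for the whole node (an assumption, not
    something the quote states).
    """
    node_rate = total_cost_usd / hours
    gpu_rate = node_rate / gpus
    return node_rate, gpu_rate


node_rate, gpu_rate = implied_rates(TOTAL_COST_USD, GPUS_PER_NODE, RUN_HOURS)
gpu_hours = GPUS_PER_NODE * RUN_HOURS

print(f"GPU-hours used: {gpu_hours}")                  # 192
print(f"Implied node rate: ${node_rate:.2f}/hour")     # $28.00/hour
print(f"Implied per-GPU rate: ${gpu_rate:.2f}/hour")   # $3.50/hour
```

In other words, the whole run is 192 H100 GPU-hours, which at the quoted total works out to roughly $3.50 per GPU-hour.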

Author

AiUTOMATING PEOPLE, ABN ASIA was founded by people with deep roots in academia, with work experience in the US, Holland, Hungary, Japan, South Korea, Singapore, and Vietnam. ABN Asia is where academia and technology meet opportunity. With our cutting-edge solutions and competent software development services, we're helping businesses level up and take on the global scene. Our commitment: Faster. Better. More reliable. In most cases: Cheaper as well.

Feel free to reach out to us whenever you require IT services, digital consulting, off-the-shelf software solutions, or if you'd like to send us requests for proposals (RFPs). You can contact us at [email protected]. We're ready to assist you with all your technology needs.

© ABN ASIA