Machine Learning Models: Model Compression Methods

- Authors

- Name

- AbnAsia.org

- @steven_n_t

Why compress Machine Learning models? Because they are way too big now.

Not too long ago, the largest Machine Learning models most people would deal with reached a few GB in memory at most. Now, new generative models routinely come out with anywhere between 1B and 1T parameters! To get a sense of the scale: one float parameter takes 32 bits, or 4 bytes (2 bytes in Float16), so storing the weights of those models takes between 4 GB and 4 TB in Float32 (2 GB to 2 TB in Float16), all of it on expensive hardware. Training is worse: during backpropagation, the gradients, optimizer states, and stored activations come on top of the weights, and the total can reach several times the weight memory, as much as 10 times depending on the setup. Because of this massive increase in scale, there has been quite a bit of research on reducing model size while keeping performance up. There are 5 main techniques to compress a model.
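To make the arithmetic concrete, here is a minimal sketch that only counts the memory needed to store the weights. The helper name `model_memory_gb` is made up for this example, and it assumes 1 GB = 10^9 bytes.

```python
def model_memory_gb(num_params: float, bytes_per_param: int = 4) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

for name, n in [("1B", 1e9), ("1T", 1e12)]:
    fp32 = model_memory_gb(n, 4)  # Float32: 4 bytes per parameter
    fp16 = model_memory_gb(n, 2)  # Float16: 2 bytes per parameter
    print(f"{name} params: {fp32:,.0f} GB in Float32, {fp16:,.0f} GB in Float16")
```

This ignores everything needed at training time (gradients, optimizer states, activations), which is where the extra multiple comes from.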

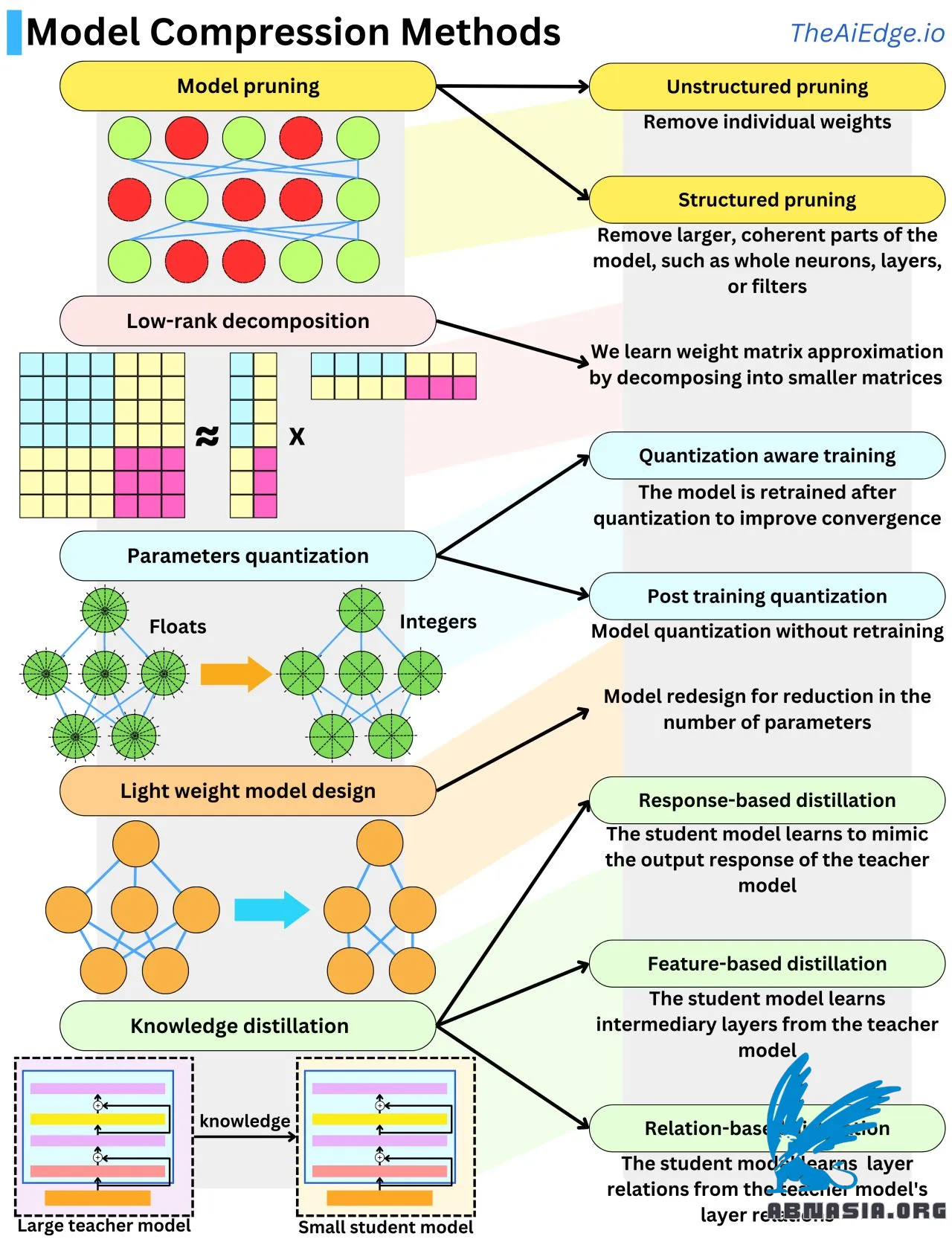

Model pruning is about removing unimportant weights from the network. The game is to understand what "important" means in that context. A typical approach is to estimate the impact of each weight on the loss function, which can be approximated from the gradient and the second-order derivative of the loss with respect to that weight. Another way is to train with L1 or L2 regularization, which pushes weights toward zero, and then get rid of the low-magnitude ones. Removing individual weights is called "unstructured pruning"; it shrinks the model but only speeds up inference when the hardware or library can exploit sparsity. Removing whole neurons, layers, or filters is called "structured pruning"; it leaves smaller dense layers and is therefore more efficient when it comes to inference speed.
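Here is a rough sketch of the magnitude-based flavor of pruning, using NumPy on a single weight matrix. The function names `magnitude_prune` and `prune_neurons`, the sparsity level, and the random matrix are all assumptions made for illustration; real frameworks ship their own pruning utilities.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # Threshold is the k-th smallest absolute value in the matrix
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    mask = np.abs(weights) > threshold
    return weights * mask

def prune_neurons(weights: np.ndarray, keep: int) -> np.ndarray:
    """Keep only the `keep` rows (neurons) with the largest L2 norm."""
    norms = np.linalg.norm(weights, axis=1)
    kept_rows = np.sort(np.argsort(norms)[-keep:])
    return weights[kept_rows]

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 4))

W_sparse = magnitude_prune(W, sparsity=0.5)   # same shape, half the entries are zero
W_small = prune_neurons(W, keep=2)            # smaller dense matrix

print("zeros after magnitude pruning:", int((W_sparse == 0).sum()), "of", W.size)
print("shape after neuron pruning:", W_small.shape)
```

The first function keeps the matrix shape and only introduces zeros, while the second one returns a genuinely smaller dense matrix, which is why removing whole neurons tends to translate more directly into faster inference.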

Model quantization is about decreasing parameter precision, typically by moving from float (32 bits) to integer (8 bits). That's 4X model compression. Quantizing parameters tends to cause the model to deviate from its convergence point, so it is typical to train or fine-tune it with the quantization simulated in the forward pass, which lets the weights adapt to the rounding error and keeps model performance high. We call this "quantization-aware training". When we skip this step and quantize an already trained model directly, it is called "post-training quantization"; a small calibration dataset and additional heuristic modifications to the weights can be used to help performance.
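As an illustration of the float-to-integer step, the sketch below applies a simple symmetric, per-tensor int8 scheme with NumPy. This is only one of several possible schemes, and the names `quantize_int8` and `dequantize` are chosen for this example rather than taken from any library.

```python
import numpy as np

def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor quantization: float32 values -> int8 values plus one scale."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 values back to approximate float32 values."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
W = rng.normal(size=(256, 256)).astype(np.float32)

q, scale = quantize_int8(W)
W_hat = dequantize(q, scale)

print("compression factor:", W.nbytes / q.nbytes)
print("max absolute rounding error:", float(np.max(np.abs(W - W_hat))))
```

The rounding error is bounded by about half the scale, and that error is what pushes the model away from its convergence point and what the extra fine-tuning is meant to absorb.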

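Low-rank decomposition comes from the fact that neural network weight matrices can often be approximated by products of lower-dimension matrices. An N x N matrix can be approximately decomposed into the product of an N x r matrix and an r x N matrix, where the rank r is much smaller than N. That takes the space complexity from O(N^2) to O(N r), which gets close to O(N) when r is a small constant! The extreme case r = 1 is the product of an N x 1 and a 1 x N matrix, but such a rank-1 approximation is usually too coarse to preserve accuracy.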
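One common way to obtain such a factorization is a truncated SVD, sketched below with NumPy. The rank `r`, the helper name `low_rank_factors`, and the synthetic near-low-rank matrix are assumptions made for the demo; real weight matrices may need a larger rank to reach a similar error.

```python
import numpy as np

def low_rank_factors(W: np.ndarray, r: int):
    """Return A (N x r) and B (r x N) such that A @ B approximates W."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :r] * s[:r]   # scale the first r left singular vectors
    B = Vt[:r, :]          # first r right singular vectors
    return A, B

rng = np.random.default_rng(0)
N, r = 256, 16

# Synthetic matrix that is close to rank r, plus a bit of noise (demo assumption)
W = rng.normal(size=(N, r)) @ rng.normal(size=(r, N)) + 0.01 * rng.normal(size=(N, N))

A, B = low_rank_factors(W, r)
rel_err = np.linalg.norm(W - A @ B) / np.linalg.norm(W)

print("parameters:", W.size, "->", A.size + B.size)
print("relative reconstruction error:", rel_err)
```

Storing the two factors takes 2 N r numbers instead of N^2, so the saving grows as r gets smaller relative to N.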

Knowledge distillation is about transferring knowledge from one model to another, typically from a large model (the teacher) to a smaller one (the student). When the student model learns to produce output responses similar to the teacher's, that is response-based distillation. When the student model learns to reproduce the teacher's intermediate layers, it is called feature-based distillation. When the student model learns to reproduce the relationships between layers or between data samples, it is called relation-based distillation.
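For the response-based case, a common formulation compares temperature-softened output distributions of the two models with a KL divergence. The sketch below is a NumPy version under that assumption; the temperature `T`, the `T * T` scaling convention, and the toy logits are illustrative choices, not something prescribed by this article.

```python
import numpy as np

def log_softmax(logits: np.ndarray, T: float = 1.0) -> np.ndarray:
    """Numerically stable log-softmax of logits divided by temperature T."""
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits: np.ndarray, teacher_logits: np.ndarray, T: float = 2.0) -> float:
    """KL(teacher || student) on softened outputs, averaged over the batch."""
    log_p = log_softmax(teacher_logits, T)   # teacher distribution (target)
    log_q = log_softmax(student_logits, T)   # student distribution
    kl = np.sum(np.exp(log_p) * (log_p - log_q), axis=-1)
    return float(kl.mean() * T * T)

teacher = np.array([[4.0, 1.0, 0.5]])
student_close = np.array([[3.5, 1.2, 0.4]])
student_far = np.array([[0.5, 1.0, 4.0]])

print("loss, close student:", distillation_loss(student_close, teacher))
print("loss, far student:  ", distillation_loss(student_far, teacher))
```

In practice this term is usually mixed with the ordinary loss on the true labels, and the feature-based and relation-based variants swap the logits for intermediate activations or for relationships between them.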

Lightweight model design is about using knowledge from empirical results to design architectures that are more efficient from the start, rather than compressing a large model after the fact. It is probably one of the most used methods in LLM research.

Author

DIGITIZING ASIA, ABN ASIA was founded by people with deep roots in academia, with work experience in the US, Holland, Hungary, Japan, South Korea, Singapore, and Vietnam. ABN Asia is where academia and technology meet opportunity. With our cutting-edge solutions and competent software development services, we're helping businesses level up and take on the global scene. Our commitment: Faster. Better. More reliable. In most cases: Cheaper as well.

© ABN ASIA